There are many uses for being able to identify and locate an object (here, a hand) in an image. For example, if we can reliably detect and localize hands in images and video, we can use that for gesture recognition and trigger operations based on the gestures. Some of the earliest working applications of this kind of technology that I can recall are PS3 and MS Kinect based games. The PS3 used a camera and movement controllers, whereas Kinect did not use any movement controller and carried out skeletal tracking of the body itself.

Although we can apply an object detection algorithm to still images, object recognition is only really useful if it is fast enough to work on real-time video input. Besides being fast, the algorithm needs to work for different users, different locations and different lighting conditions. In the sections that follow I discuss the options we have available and which ones are useful against these criteria.

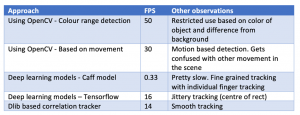

Broadly, I think real-time object recognition can be done with the following approaches:

1. Using OpenCV – Colour range detection

2. Using OpenCV – Based on movement (will only work for videos)

3. Deep learning models – Caffe model

4. Deep learning models – Tensorflow

5. Dlib based tracking

I will discuss each approach briefly and run experiments to see which one works.

Note that all the experiments were run on my laptop with the following configuration:

MacBook Pro, 2.2 GHz i7 processor with 16 GB RAM

1. Using OpenCV – Colour range detection

Since the colour of the object will differ significantly for each user (even for a hand), and will vary a lot with lighting conditions, we need the user to first show us the colour of the object we should track. Based on the detected colour, we then create a range that we track in the image (video frame).

Note: For this approach to work, the colour we are tracking needs to be significantly different from the background; otherwise the application will identify background objects as our object of interest.

This is a significant restriction on using this method for hand recognition, since a hand can very easily be confused with the face, which has a similar colour. However, if we let the user hold an object with a very distinct colour, this method can be very effective.

As can be seen in the video above, we start by selecting and training for the colour that we want to track (green), which is significantly different from all the colours in the background. However, sunlight falls on part of the area, which creates a sharp difference in the illumination of the object we are tracking, and so we lose track of the object almost immediately. Later, when I pull the curtains and the whole area is evenly illuminated, the tracker works really well. I tried objects of different colours and both worked. The point to note here is that the video runs at 50 frames per second, which is really fast.

2. Using OpenCV – Based on Movement

Movement lets us easily separate the foreground from the background (assuming there is no movement in the background). There are multiple ways to detect movement in a video. OpenCV provides ways to create a background model, and you can then use absdiff, as indicated in this link, to find the difference between the current frame and the background and so localise the movement.

Once you have done that, you can segment out the region of movement and then try to locate the object of interest (the hand) within that region. In our case we used the topmost part of the detected contours as the area that we want to track.

As can be seen in the video above, this approach generally works really well. The biggest issue, however, is that it is not easy to distinguish the movement of the object we want (the hand in this case) from other moving objects, such as my head and face. The moment my hand drops below my head, the tracker jumps to my head, because we are tracking the highest contour on the screen. The processing rate is reasonably fast at 30 frames per second.

3. Deep learning models – Caffe

One of the best parts of using Caffe based models is that they can easily be used with OpenCV. There is a Caffe based deep learning model that can detect and locate the hand and its keypoints, which is very useful for finding the hand and the fingers in an image. The details can be found in this blog (reference 2 below).

As can be seen above, I tried this model, but its performance on my laptop was far from real time (roughly 0.35 fps), so I did not explore this option any further. It does, however, offer very fine-grained control, in the sense that we can detect individual fingers and track multiple points on the hand.

4. Deep learning models – Tensorflow

TensorFlow is a deep learning library that can be used to build object detectors. For our test we use the ready-made hand detector available at https://github.com/victordibia/handtracking. It seems to be a very good model with relatively good speed as well. It has also been wrapped into an easy-to-use JS based library.

This library was developed on TensorFlow 1.x, and I am using TensorFlow 2.x (Python 3.7) for my experiments. I did not want to switch library versions, as that could also affect the performance measurements, so I modified the code a little to work with TensorFlow 2.0. Not many changes were needed, though.

As can be seen in the video, hand tracking works well and is reasonably fast at around 16-17 frames per second, very close to what Victor (the author) claims in his blog.

5. Dlib based tracking

Dlib does not provide a model to detect objects (hands) out of the box (that I am aware of), but it has a powerful function that can be used effectively in combination with a deep learning model. Since deep learning models are generally slow, combining the two approaches can be a win-win, which is why I have listed this option here.

Dlib is a powerful library that offers a very useful feature: correlation_tracker.

Correlation Tracker can be used to track any object across the subsequent frames of a video. You initialise the tracker with the original frame and the object you want to track in it (the tricky part), and after that you can track the object in the subsequent frames. However, if the object gets hidden for any reason, for example because it goes behind another object or gets masked out of the video because it has not moved for some time, we need to reinitialise the tracker.

As can be seen in the video, we initially track the object (hand) using the deep learning model from step 4. As long as the hand is in a green bounding rectangle, it is being tracked by the deep learning model. Once we are confident that the deep learning model has detected the hand, we pass the object location to the dlib correlation tracker (the rectangle changes to teal), and the tracker's confidence is displayed on the screen. The dlib tracker loses the object as soon as the object changes shape, so in this example I have backed up the dlib tracker with the deep learning model: as soon as the dlib confidence drops below the threshold (8 in my case), I reinitialise the tracker from the deep learning model (the video clearly shows the switching between green and teal multiple times). FPS in this case is around 14, which is lower than the standalone deep learning model. However, the tracking is smoother (less jittery than the TensorFlow based deep learning model on its own).
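The switching logic described above can be sketched as below. This is an illustration under stated assumptions, not the code from the experiment: `HybridTracker`, `DlibTracker`, `detect` and `make_tracker` are hypothetical names, the detector is any callable that returns a `(left, top, right, bottom)` box of integers or `None`, and the default threshold of 8 is the value mentioned in the text. The dlib calls used (`correlation_tracker`, `start_track`, `update`, `get_position`) are from dlib's Python API.

```python
class HybridTracker:
    """Use a cheap tracker while it is confident, fall back to a detector otherwise."""

    def __init__(self, detect, make_tracker, min_confidence=8.0):
        self.detect = detect              # frame -> (left, top, right, bottom) or None
        self.make_tracker = make_tracker  # (frame, box) -> tracker object
        self.min_confidence = min_confidence
        self.tracker = None

    def step(self, frame):
        """Return (box, source); source is 'tracker', 'detector' or None."""
        if self.tracker is not None:
            confidence = self.tracker.update(frame)
            if confidence >= self.min_confidence:
                return self.tracker.position(), "tracker"
            # Confidence too low: drop the tracker and re-detect below.
            self.tracker = None
        box = self.detect(frame)
        if box is None:
            return None, None
        # Hand the detected location over to a fresh tracker for the next frames.
        self.tracker = self.make_tracker(frame, box)
        return box, "detector"


class DlibTracker:
    """Thin adapter around dlib.correlation_tracker."""

    def __init__(self, frame, box):
        import dlib  # imported lazily so the logic above runs without dlib installed
        self._tracker = dlib.correlation_tracker()
        self._tracker.start_track(frame, dlib.rectangle(*box))

    def update(self, frame):
        # dlib returns a confidence score for the tracked position.
        return self._tracker.update(frame)

    def position(self):
        p = self._tracker.get_position()
        return int(p.left()), int(p.top()), int(p.right()), int(p.bottom())
```

In use, something like `HybridTracker(detect=my_hand_detector, make_tracker=DlibTracker)` would be created once, with `step(frame)` called per frame; the returned source could drive the green/teal rectangle colour.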

Summary

Below is the summary of all the methods that we have looked at.

I will share the code for all the approaches very soon.

Future work:

1. A combination of approaches 1 and 2 should help us locate the object of interest easily, even if it is not very different from objects in the background or if there is some movement in the background as well.

2. I should try PyTorch based deep learning models.

If there are other options you think I should try, please comment below.

References:

1. Interesting blog post that uses skin colour identification https://medium.com/@soffritti.pierfrancesco/handy-hands-detection-with-opencv-ac6e9fb3cec1

2. Caffe based model for hand key point detection https://www.learnopencv.com/hand-keypoint-detection-using-deep-learning-and-opencv/

3. Tensorflow based deep learning model

a. https://github.com/victordibia/handtracking

b. https://medium.com/@victor.dibia/how-to-build-a-real-time-hand-detector-using-neural-networks-ssd-on-tensorflow-d6bac0e4b2ce

4. Lots of very useful articles on OpenCV and deep learning

a. https://www.pyimagesearch.com/2016/04/11/finding-extreme-points-in-contours-with-opencv/

5. Dlib correlation tracker http://dlib.net/correlation_tracker.py.html

6. OpenCV based movement detection https://docs.opencv.org/2.4/modules/imgproc/doc/motion_analysis_and_object_tracking.html