Plenty of tutorials already explain the concepts behind Artificial Neural Networks (ANN), and I do not want this blog to repeat what has been said many times, and in better ways, elsewhere. Instead, I am going to highlight the key points to keep in mind while you study ANN concepts, so that you understand them deeply enough to code a neural network by hand.

As mentioned above, there are many tutorials on the web. However, each one uses slightly different naming conventions, and it is very easy to get confused by them. Hence, I recommend going through the ANN videos by Andrew Ng (link). (This link is for the complete machine learning course; if you only want to focus on ANN, go through lecture series 8 and 9.) Once you understand the concepts and naming conventions, you can read as many papers as you like to solidify your understanding. Also, while you can read multiple tutorials to understand the concepts, when implementing a neural network try to stick to just one naming convention.

Forward Propagation

Back Propagation

The key concepts to focus on in detail when implementing a neural network are:

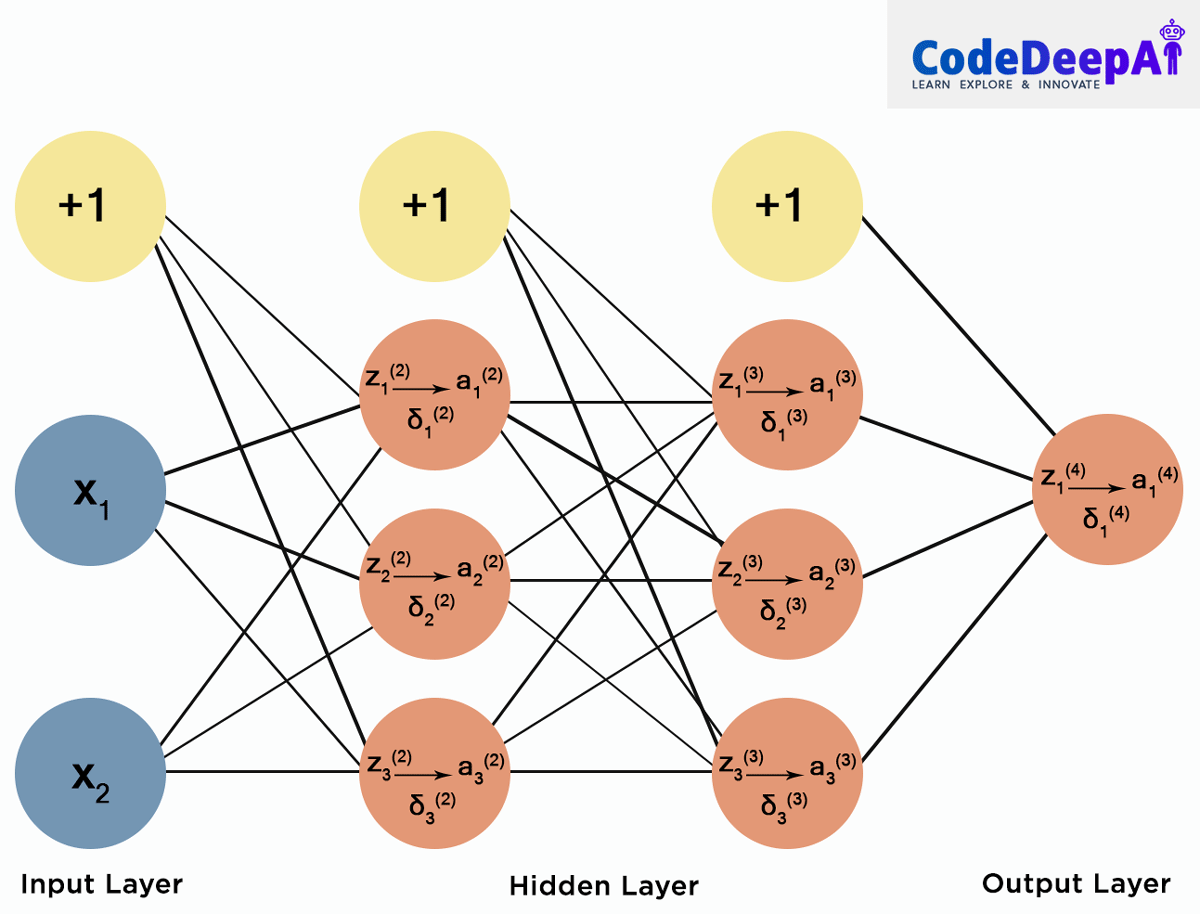

- Layers: This is the total number of layers of neurons. For example, the diagram above shows a 4-layer neural network.

  - Layer 1 is also called the input layer

  - Layers 2 and 3 are hidden layers

  - Layer 4 is the output layer

- Nodes: Each neuron is referred to as a node. For example, in the diagram above we have 2 nodes in layer 1, 3 nodes in layer 2, and so on. Keep a count of the number of nodes in each layer, as this will be very important.

- Bias node: We generally add 1 bias node to every layer except the output layer.

- Weights (or parameters): These are the numbers that we optimize / learn in the neural network training process. It is very important to note that in a simple feedforward NN, each node in layer 1 is connected to all the nodes in layer 2. Since we have 3 nodes (2 nodes + 1 bias node) in the input layer and 4 nodes (3 + 1) in the second layer, we will have a total of (2+1)*(3+1) = 12 weights. (This count also gives the bias node of layer 2 incoming weights; under the convention where a bias node receives no input, there would be (2+1)*3 = 9.) The weights for layer 1 are the weights that are applied to the inputs from layer 1 to get the activations for layer 2.

- Activation and its calculation: When you go through an ANN tutorial, you really need to understand how the activation is calculated. Conceptually it is not very complicated: it is just the sigmoid of the weighted sum of the inputs. But you need to clearly understand the vector representation and how the calculation is carried out.

- Forward propagation: Calculating the activation for each layer in turn, from the input layer to the output layer, is called forward propagation. Once you understand the calculation for one layer, it is easy to apply it to every following layer up to the output layer. The activation of the output layer is the output of the network.

- Error calculation: This is simply the difference between the value the network produces at the output layer and the actual value (which we have from the labelled training set).

- Error back propagation (calculate delta): The error calculated in the output layer is then back propagated to find the error in each of the nodes in each layer. Delta (δ) is analogous to activation in forward propagation. Delta is computed for all the nodes in the network (except the nodes in the input layer). Pay careful attention to the formula for the delta calculation.

- Weights update: Once you have calculated delta (δ), you use the delta for layer 2 and the activation of layer 1 to come up with the update for the layer 1 weights.
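The steps in the list above can be put together in a few lines of numpy. What follows is a minimal sketch, not a reference implementation, and it rests on several assumptions that are not in the text: the layer sizes 2, 3, 3, 1, every hidden layer keeping its bias node in column 0, the output delta taken as network output minus actual value (the convention used in Andrew Ng's course), plain gradient descent with a hypothetical `learning_rate`, and all the function names.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias(a):
    """Prepend a column of ones (the bias node) to a 2-D array."""
    return np.hstack([np.ones((a.shape[0], 1)), a])

def init_weights(layer_sizes, rng):
    """One weight matrix per pair of adjacent layers, bias slots included."""
    # Assumption: every layer except the output carries one extra bias slot.
    widths = [n + 1 for n in layer_sizes[:-1]] + [layer_sizes[-1]]
    return [rng.uniform(-1.0, 1.0, size=(widths[i], widths[i + 1]))
            for i in range(len(widths) - 1)]

def forward(x, weights):
    """Forward propagation: return the activations of every layer."""
    activations = [add_bias(x)]
    for i, w in enumerate(weights):
        a = sigmoid(activations[-1] @ w)  # sigmoid of the weighted sum
        if i < len(weights) - 1:
            a[:, 0] = 1.0  # hidden layer: column 0 is the bias slot
        activations.append(a)
    return activations

def backward(activations, weights, y):
    """Back propagation: one delta per non-input layer."""
    deltas = [activations[-1] - y]  # error at the output layer
    for w, a in zip(reversed(weights[1:]), reversed(activations[1:-1])):
        # Push the error back through w, then scale by the sigmoid slope.
        deltas.insert(0, (deltas[0] @ w.T) * a * (1.0 - a))
    return deltas

def gradients(x, y, weights):
    """Weight updates, each with the same shape as its weight matrix."""
    activations = forward(x, weights)
    deltas = backward(activations, weights, y)
    # Activation of layer l (transposed) times delta of layer l + 1.
    return [a.T @ d / x.shape[0] for a, d in zip(activations, deltas)]

def train_step(x, y, weights, learning_rate=0.5):
    """Apply one gradient-descent update to the weights in place."""
    for w, g in zip(weights, gradients(x, y, weights)):
        w -= learning_rate * g

# Illustrative data only: the logical OR of two inputs.
x = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [1.0]])

weights = init_weights([2, 3, 3, 1], np.random.default_rng(0))
print([w.shape for w in weights])  # [(3, 4), (4, 4), (4, 1)]

for _ in range(1000):
    train_step(x, y, weights)
print(forward(x, weights)[-1].round(2))  # network output after training
```

Because the activation of a bias slot is fixed at 1, its sigmoid slope `a * (1 - a)` is 0, so its delta is 0 and the weights feeding into it never change. That is why this layout can keep the bias slots inside the weight matrices without any special handling in `backward`.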

Summary

- You need to understand how weights relate to the layers. Remember that the weights for layer 1 are the weights between layer 1 and layer 2, so there are (number of nodes in layer 1) * (number of nodes in layer 2) of them, bias nodes included. So, in our example above we will have:

      [array([[W00, W01, W02, W03],
              [W10, W11, W12, W13],
              [W20, W21, W22, W23]]),
       array([[X00, X01, X02, X03],
              [X10, X11, X12, X13],
              [X20, X21, X22, X23],
              [X30, X31, X32, X33]]),
       array([[Y01],
              [Y11],
              [Y21],
              [Y31]])]

- You need to understand that delta relates to the nodes in the same way as activation does

- You need to understand how to calculate the change in each weight from the delta of each node. The weight update matrix needs to have the same dimensions as the weight matrix itself: (number of nodes in layer 1) * (number of nodes in layer 2)

- All this requires a good grasp of numpy arrays and a command of the following operations:

  - Transpose

  - Dot product

  - Adding a column to a 2D array (to add the bias, etc.)
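The numpy operations listed above each take one line, and following the array shapes is a quick way to check your understanding. The sketch below is only an illustration: the input values, the constant weights of 0.1, and the stand-in delta of ones are made up, and the variable names (`a1`, `w1`, `z2`, `delta2`, `update`) are hypothetical.

```python
import numpy as np

# A batch of 4 examples with 2 input nodes each, shape (4, 2).
a1 = np.array([[0.0, 0.0],
               [0.0, 1.0],
               [1.0, 0.0],
               [1.0, 1.0]])

# Add a column to a 2-D array: prepend the bias node as a column of ones.
a1 = np.hstack([np.ones((a1.shape[0], 1)), a1])   # shape (4, 3)

# Weights between layer 1 (2 + 1 nodes) and layer 2 (3 + 1 nodes).
w1 = np.full((3, 4), 0.1)

# Dot product: the weighted sum of the inputs for every node in layer 2.
z2 = a1 @ w1                                      # shape (4, 4)

# Stand-in delta for layer 2: one value per node, same shape as z2.
delta2 = np.ones_like(z2)

# Transpose plus dot product: the result has the same shape as w1.
update = a1.T @ delta2                            # shape (3, 4)

print(a1.shape, z2.shape, update.shape)  # (4, 3) (4, 4) (3, 4)
```

If the shape of `update` ever differs from the shape of the weight matrix it is meant to change, a transpose is usually missing or applied to the wrong operand.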

If you have paid close attention to the above points while studying neural networks, you are all set to start the implementation.